Lessons from applying the EU AI Act to an industrial application

By Christian Agrell

The EU AI Act, the first comprehensive law on artificial intelligence, is now in effect. Prohibited AI practices were banned earlier this year, and companies face a series of milestones until full implementation in 2027. With around 90,000 words and thirteen annexes, the Act is complex, and non-compliance can result in significant fines.

In an industrial context, applying the EU AI Act reflects the complexity of the processes it seeks to regulate. Judging an AI system against the Act's criteria requires knowledge of both the AI in the system and the industrial process or asset it affects, as well as legal and assurance expertise. This challenge sits comfortably within DNV's history of managing risk, understanding technologies, and understanding how they fit into regulatory frameworks.

To demonstrate our ability to guide customers towards compliance with the EU AI Act, and to share key learnings, we set up a working group of eight experts to assess one of our own tools against the legislation. Smart Cable Guard (SCG) is a technology designed to monitor the condition of medium-voltage power cables, detecting and locating faults and weak spots. The system uses AI to analyse signals from the cables and provides customers with actionable insights, helping to prevent cable system failures and make maintenance more effective. Across many industries, predictive maintenance has emerged as a compelling application of AI, enabling systems to determine the best times and locations for inspections or repairs. At the same time, understanding how the AI Act will affect such systems is challenging. The SCG use case gave us an opportunity to tackle this challenge directly.

All companies operating AI systems in the EU will need to ensure they comply with the Act. For companies embarking on this process, particularly in an industrial context, we hope these learnings will help shape best practices.

What is AI?

This is the first question to ask when undertaking such an assessment. If it can be argued that a tool is not AI, it does not fall under the Act.

For this exercise, only the Act's own definition matters. It states:

“‘AI system’ means a machine-based system that is designed to operate with varying levels of autonomy and that may exhibit adaptiveness after deployment, and that, for explicit or implicit objectives, infers, from the input it receives, how to generate outputs such as predictions, content, recommendations, or decisions that can influence physical or virtual environments.”

This is a broad definition. SCG has two functions: 1) detection of weak spots (partial discharge) and 2) detection of faults. The technology SCG uses for each of these functions required careful assessment against the Act's definition of AI. SCG contains digital components that are clearly AI (such as machine learning models) as well as algorithms that require more thorough assessment against the definition (such as implementations of simpler statistical rules). Ultimately, given the Act's broad definition, we concluded that SCG contains two AI elements. The process also highlighted how a single function can contain more than one AI element.
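The per-component assessment described above can be pictured as a checklist applied to each digital component within a function. The Python sketch below is a hypothetical illustration only: the `Component` fields are our simplified reading of the definition quoted above, the component names are invented and do not describe SCG's actual architecture, and each boolean stands in for what is, in practice, an expert judgement rather than a mechanical test.

```python
from dataclasses import dataclass


@dataclass
class Component:
    """A digital component within one system function (hypothetical, simplified)."""
    name: str
    machine_based: bool           # runs on a machine
    has_autonomy: bool            # operates with some degree of autonomy
    has_objective: bool           # pursues explicit or implicit objectives
    infers_outputs: bool          # infers from its input how to generate outputs
    influences_environment: bool  # outputs can influence physical or virtual environments
    adaptive_after_deployment: bool = False  # "may exhibit": recorded, but not required


def meets_ai_definition(c: Component) -> bool:
    """True if every required element of the quoted definition is judged present."""
    # Adaptiveness is deliberately not checked: the definition says a system
    # "may" exhibit it, so its absence does not rule a component out.
    return all((c.machine_based, c.has_autonomy, c.has_objective,
                c.infers_outputs, c.influences_environment))


def ai_elements(function_components: list[Component]) -> list[str]:
    """Names of the components in one function that meet the definition."""
    return [c.name for c in function_components if meets_ai_definition(c)]


if __name__ == "__main__":
    # Invented components for a single monitoring function.
    detection = [
        Component("ml_classifier", True, True, True, True, True),
        Component("statistical_rule", True, True, True, True, True),
        Component("signal_filter", True, True, True, False, False),
    ]
    print(ai_elements(detection))  # ['ml_classifier', 'statistical_rule']
```

The example shows how one function can contain more than one component that meets the definition. Here the simpler statistical rule qualifies only because we marked `infers_outputs` as true, which is exactly the kind of judgement that, for simpler statistical rules, needs the most careful assessment.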

Assessing what is high risk

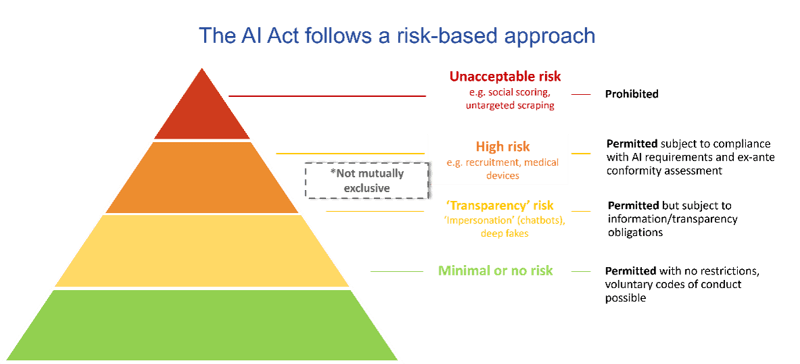

The Act takes a hierarchical approach to risk, with obligations that scale with the level of risk. From highest to lowest, the four categories are unacceptable risk, high risk, ‘transparency’ risk, and minimal or no risk.

The AI in SCG serves two purposes: partial discharge (PD) detection and location, which identifies sections of cable at risk of failing, and fault detection, which reports failures on the cable. The question we investigated was: can SCG be classified as a high-risk AI system or not? To answer this, we had to assess whether the use of data from SCG (with AI inside) directly puts the life, health or safety of persons, or property, at risk.

As SCG is used in the context of power grid operation, it could fall under the Act's Annex III category of “safety components” of critical infrastructure. The definition of “safety component” is also broad: it covers not only technologies that perform a safety function, but also those whose failure or malfunction endangers the health and safety of persons or property. For SCG, and for predictive maintenance of critical infrastructure more generally, the risk classification under the AI Act will therefore depend on how the technology is used.

Currently, SCG is intended as an advisory tool. By analysing how the technology is used (human-machine interaction) and the associated risks, we concluded that SCG falls into the transparency risk category.
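The central point here, that the same predictive maintenance tool can land in different categories depending on how its outputs are used, can be captured in a few lines. The Python sketch below is a hypothetical simplification that mirrors only the reasoning in this case study: it assumes the tool already sits in the Annex III critical-infrastructure context, reduces the analysis of human-machine interaction and associated risks to a single invented `advisory_only` flag, and is not a statement of the Act's legal test.

```python
from enum import IntEnum


class RiskTier(IntEnum):
    """The Act's four risk categories, ordered so that higher means riskier."""
    MINIMAL = 0
    TRANSPARENCY = 1
    HIGH = 2
    UNACCEPTABLE = 3


def classify_grid_monitoring_tool(advisory_only: bool) -> RiskTier:
    """Hypothetical tier for an AI tool used in power grid operation.

    Mirrors this case study only: `advisory_only` stands in for the full
    analysis of human-machine interaction and associated risks.
    """
    if advisory_only:
        # Case-study conclusion for an advisory deployment.
        return RiskTier.TRANSPARENCY
    # Outputs acted on directly in grid operation: treat as a potential
    # safety component of critical infrastructure under Annex III.
    return RiskTier.HIGH


if __name__ == "__main__":
    for advisory in (True, False):
        tier = classify_grid_monitoring_tool(advisory)
        print(f"advisory_only={advisory}: {tier.name}")
```

Because `RiskTier` is an `IntEnum`, the tiers compare in the Act's hierarchical order, for example `RiskTier.HIGH > RiskTier.TRANSPARENCY`. Flipping `advisory_only` changes the result, which is why the classification must be revisited if the intended use changes.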

Next steps

We placed SCG in the transparency risk category, which still carries obligations that must be met.

This classification holds only as long as SCG is used as an advisory tool, which is its intended purpose.

Demanding on time and expertise

The team of eight who ran this project consisted of experts in energy, AI, law and assurance. As we were running this as a training exercise, we deliberately chose a large group to facilitate diverse viewpoints and robust discussions.

Our experience shows that the decision-making process is not always as clear-cut as it may appear at the outset, and we would advise companies to start thinking about the EU AI Act sooner rather than later. Through this exercise, we also developed tools and internal guidelines to streamline and speed up similar assessments of new use cases in the future.

Conclusion

For industrial use of AI that may be classified as high-risk, the route to compliance can be a complex one. Companies should acknowledge this from the outset and equip themselves with the required expertise, tools and timeframe to meet the requirements of the EU AI Act.

Whilst the EU AI Act is a question of compliance, it should also spark a deeper conversation about the importance of safe AI, especially in industrial settings. Our recommended practices for safe AI are designed to help companies develop safe systems. We at DNV will continue to explore best practices in this field, so please reach out if you would like to discuss them with us.