Introduction to the EU’s AI Act: What you should know

This article provides an initial overview of the European Union’s Artificial Intelligence Act (AI Act). It outlines key application timelines and explores the potential implications for organizations.

What is the AI Act?

The AI Act is an EU regulation that, in simple terms, is relevant for organizations providing or using AI systems within the EU. Most provisions of the Act are expected to become applicable in August 2026, with some already in effect. The primary goal of the Act is to promote the uptake of human-centric, trustworthy AI while protecting health, safety, and fundamental rights. Compliance will be supervised by national authorities across the EU and by the AI Office, among others, and further guidance will be provided by EU-level bodies such as the AI Office and the AI Board. The law is complex, comprising 180 recitals, 68 definitions, 113 articles, and 13 annexes.

To give a quick overview, the Act’s primary goals are to:

- Promote the uptake of trustworthy, human-centric AI

- Limit the harmful effects of AI systems

- Support innovation

- Ensure a high level of protection for health, safety, and fundamental rights

The AI Act represents one of the most significant steps the EU has ever taken in regulating technology. It seeks not to restrain innovation, but to foster the growth of responsible, people-focused AI. Because it lays out the minimum requirements for placing in-scope AI systems on the EU market, businesses should pay attention to it now rather than later.

What is the definition of an AI system under the Act?

The Act applies to systems meeting the definition of an “AI system”. If we simplify a bit, an “AI system” is any machine-based system that is designed to operate with varying levels of autonomy, that infers from its inputs how to generate outputs, and that may learn and adapt after deployment. These systems do not simply follow a fixed program; they can generate results such as predictions, content, recommendations, or decisions, and their output can influence the digital or physical world. The Act also imposes certain obligations relating to general-purpose AI models.

For those interested in more technical details, the EU Commission has published comprehensive guidelines that further clarify what qualifies as an AI system under the Act.

Does the AI Act apply to me?

The AI Act is not limited to tech giants or niche AI startups. Its scope is broad. For instance, if an organization is developing and putting into service, selling, or using AI systems anywhere in the EU, it may fall under the Act’s purview. The following are the two most common roles defined in the Act:

Providers: This category encompasses any organization that builds and places AI systems on the EU market. Whether modifying code or releasing a system or a product using an AI system, organizations in this role could be considered providers. More specifically, a provider could be, e.g., any company, research institution, or individual in a professional context that develops an AI system or has one developed, and then puts it into service under its own name or trademark or makes it available for use, directly or through distribution channels, within the EU. Providers are responsible for ensuring their AI systems comply with the Act’s requirements before entering the market, based on the risk classification of the AI system in question. For example, high-risk AI systems need to comply with requirements related to risk management, data governance, and testing, and must address certain security aspects. These obligations cover AI systems offered as standalone products as well as AI systems integrated into other goods or services.

Deployers: Organizations that use in-scope AI systems are known as deployers. This means that even if an organization is not inventing new algorithms but uses AI systems in day-to-day operations within the EU, it falls under the Act as a deployer. This includes businesses of all sizes, public sector bodies, non-profits, and individuals using AI systems in professional contexts. Examples are organizations integrating AI systems into products, services, or internal processes — such as banks using AI systems for credit scoring, retailers deploying chatbots, hospitals applying AI systems for diagnostics, employers using AI tools for facilitating recruitment procedures, or government agencies leveraging AI systems for executing their public tasks.

While the Act defines other roles, these two categories are most relevant for the majority of organizations.

It is important to note that there are carve-outs to the Act. It does not apply in full to all industries and all use cases of AI. For example, industries such as aviation, marine, or automotive may be subject to lighter or different requirements due to existing product safety regulations. The Act is broad, but not all-encompassing. Hence, organizations should ensure they understand their specific position.

The risk-based approach of the AI Act

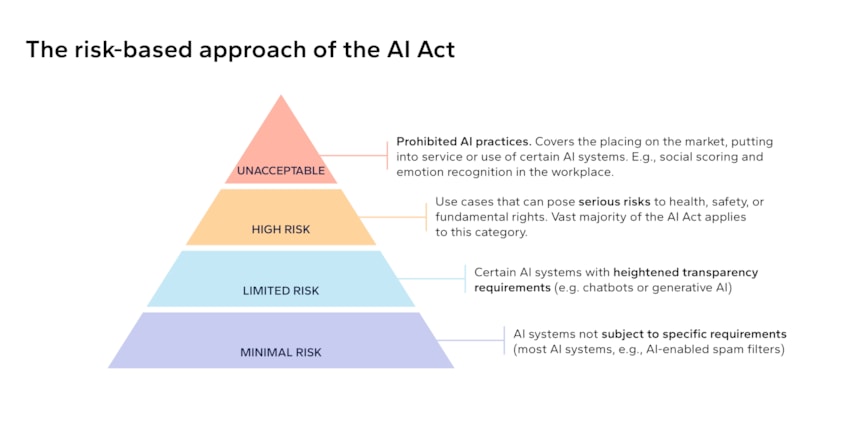

A cornerstone of the AI Act is its risk-based approach. Requirements vary depending on the risk posed by the AI system or its use case, as illustrated in the accompanying image.

The strictest risk category is that of unacceptable risk. The Act prohibits AI practices falling into this category altogether.

The vast majority of the Act’s requirements apply to so-called high-risk AI systems. Examples of these requirements include implementing a thorough risk management framework, maintaining strict data governance, and demonstrating high levels of accuracy and robustness. Robustness means that the AI system should remain resilient against harmful or undesirable behaviour. High-risk AI systems must also be protected by strong cybersecurity measures.

AI systems with heightened transparency risks — colloquially known as AI systems posing “limited risk” — are subject to specific transparency requirements.

Finally, AI systems posing minimal risk are not subject to specific requirements. Yet, providers and deployers of such systems should ensure sufficient AI literacy, and they are also encouraged to adopt measures designed to further the ethical and trustworthy uptake of AI.
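For teams that track their AI systems in software, the four tiers described above can be captured in a small lookup. The following Python is a minimal sketch rather than a legal classification tool: the names `RiskTier`, `OBLIGATIONS`, and `obligations_for` are hypothetical, the obligation summaries only paraphrase this article, and attaching AI literacy to every permitted tier is a simplifying assumption.

```python
from enum import Enum


class RiskTier(Enum):
    """The four risk tiers as summarized in this article (illustrative names)."""
    UNACCEPTABLE = "unacceptable"
    HIGH = "high"
    LIMITED = "limited"
    MINIMAL = "minimal"


# Tier-specific consequences, paraphrased from the text above.
OBLIGATIONS = {
    RiskTier.UNACCEPTABLE: ["prohibited: the practice may not be used"],
    RiskTier.HIGH: [
        "risk management framework",
        "data governance",
        "accuracy, robustness and cybersecurity",
    ],
    RiskTier.LIMITED: ["specific transparency requirements"],
    RiskTier.MINIMAL: [],
}

# Assumption: AI literacy is attached to every tier that is allowed at all.
AI_LITERACY = "AI literacy"


def obligations_for(tier):
    """Return the summarized obligations for a tier as a new list."""
    if tier is RiskTier.UNACCEPTABLE:
        return list(OBLIGATIONS[tier])
    return OBLIGATIONS[tier] + [AI_LITERACY]
```

Calling `obligations_for(RiskTier.MINIMAL)` returns only the AI literacy entry, which mirrors the point that minimal-risk systems carry no tier-specific requirements.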

What you need to do as a provider or deployer

Providers must create an inventory of all systems, products, and services they provide, or are planning to provide, to the market, to determine which fall under the scope of the AI Act as an AI system or general-purpose AI model. Next, they must categorize these within the risk category scheme referred to above and ensure compliance with the requirements laid down for each category, along with other applicable requirements of the Act, such as AI literacy. For more information on AI literacy, read our recent article “Why AI literacy matters now: The urgent compliance challenge for organizations”.

Most of the provider requirements apply to providers of high-risk AI systems. Requirements include, among others, ensuring that the AI system in question fulfils all requirements imposed on high-risk systems, having in place a quality management system, ensuring that the AI system undergoes the relevant conformity assessment procedure, and registering the AI system as required by the AI Act. When incidents occur, especially those affecting health, safety, fundamental rights, property, or the environment, they must be reported in accordance with the procedures set out in the AI Act.

Deployers must also create an inventory of the AI systems in use and classify them into the four risk categories mentioned above, with support from the system providers. They must comply with all requirements in the AI Act affecting deployers, including transparency obligations regarding the deployment of limited-risk AI systems, and ensure that, when high-risk AI systems are deployed, the organization meets all requirements set for deployers of such systems. These requirements include maintaining continuous human oversight and exercising caution when inputting data into AI tools to ensure the required quality of such input data. In certain specified operations and contexts, deployers must conduct a fundamental rights impact assessment to mitigate potential risks. Finally, deployers are responsible for ensuring AI literacy, as noted earlier.
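The inventory step described for providers and deployers can be sketched as a simple data structure. This is an illustrative Python sketch only: `InventoryEntry`, `group_by_tier`, and `needs_attention` are hypothetical names, and the tiers assigned to the example systems are assumptions for demonstration, not legal determinations.

```python
from collections import defaultdict
from dataclasses import dataclass


@dataclass(frozen=True)
class InventoryEntry:
    name: str       # internal name of the AI system
    role: str       # "provider" or "deployer"
    risk_tier: str  # "unacceptable", "high", "limited" or "minimal"


def group_by_tier(entries):
    """Group system names by their assigned risk tier."""
    grouped = defaultdict(list)
    for entry in entries:
        grouped[entry.risk_tier].append(entry.name)
    return dict(grouped)


def needs_attention(entries):
    """Names of systems in the two strictest tiers, to be reviewed first."""
    return [e.name for e in entries if e.risk_tier in ("unacceptable", "high")]


# Hypothetical inventory; the tier assignments are examples only.
inventory = [
    InventoryEntry("credit-scoring-model", "deployer", "high"),
    InventoryEntry("customer-chatbot", "deployer", "limited"),
    InventoryEntry("spam-filter", "deployer", "minimal"),
]
```

Keeping the role and the tier on each record makes it straightforward to list, for example, every high-risk system an organization deploys before working through the corresponding obligations.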

Security and governance requirements

The Act outlines explicit security requirements affecting those responsible for high-risk AI systems. Providers must ensure that accuracy, robustness, and cybersecurity are integrated into AI solutions at launch and maintained throughout their lifecycle. The AI Act recognizes that appropriate technical cybersecurity measures are dependent on the relevant circumstances of the AI system in question and the risks associated with it. Hence, technical measures designed to ensure cybersecurity must always reflect the risk landscape pertaining to the system at hand.

Where appropriate, the AI Act requires providers to implement technical measures against threats such as data poisoning, model evasion, and adversarial attacks, and to stay vigilant for emerging vulnerabilities. Both providers and deployers must have procedures in place for identifying, reporting, and mitigating serious incidents. Draft Commission guidance recommends aligning incident reporting processes with familiar regulations such as CER, NIS2, or DORA when incidents do not directly affect fundamental rights.

On the governance front, managing the quality and integrity of data used for training AI systems is imperative. This extends beyond technical details, emphasizing organizational understanding of AI risks and responsibilities. Prioritizing AI literacy prepares teams to use these tools responsibly, identify issues, and respond rapidly if problems arise. By taking these actions, organizations help ensure compliance with the law and facilitate the safe, transparent, and trustworthy use of AI.

Timeline: When does the AI Act apply?

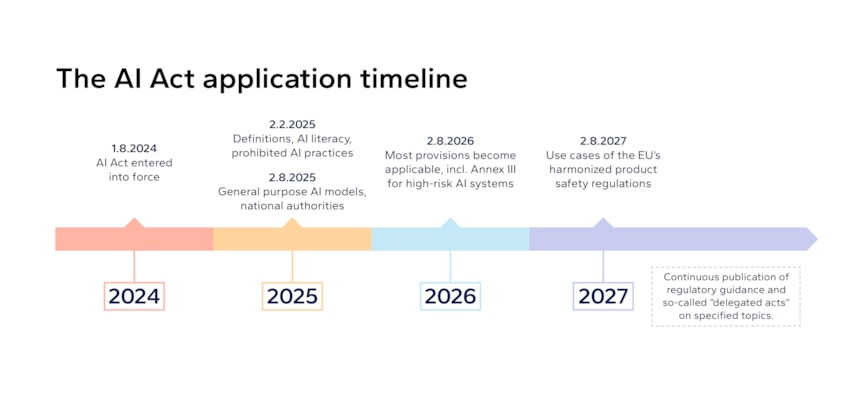

The AI Act entered into force in August 2024 and will become fully applicable in phases. Key milestones include the requirements related to AI literacy and prohibited AI practices, which came into effect in February 2025, the expected applicability of most provisions in August 2026, and further requirements for high-risk AI systems planned to become applicable in August 2027. Further regulatory guidance and delegated acts are expected to be published on an ongoing basis.
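The phased timeline can be expressed as a small lookup that answers which milestones have been reached by a given month. This is a minimal sketch in Python: `MILESTONES` and `milestones_reached` are hypothetical names, the dates are limited to the months stated above, and a milestone is treated as reached for the whole of its month, which is a simplification.

```python
# (year, month) pairs taken from the milestones named in the text above.
MILESTONES = [
    ((2024, 8), "AI Act entered into force"),
    ((2025, 2), "AI literacy and prohibited-practice requirements apply"),
    ((2026, 8), "most provisions expected to apply"),
    ((2027, 8), "further high-risk requirements planned to apply"),
]


def milestones_reached(year, month):
    """Return the labels of all milestones dated on or before the given month."""
    return [label for when, label in MILESTONES if when <= (year, month)]
```

Tuples compare element by element in Python, so `(2025, 2) <= (2025, 11)` holds and the comparison works without any date library.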

In summary, the AI Act introduces significant responsibilities for both providers and deployers of AI systems, with a strong emphasis on robust security, effective governance, and ongoing compliance.

By mandating these comprehensive measures, the AI Act aims to create a secure and trustworthy environment for the deployment and use of AI technologies within the EU.

These requirements not only protect data integrity and prevent misuse but also foster confidence in AI systems among businesses and consumers alike. Staying informed, fostering AI literacy, and establishing strong internal procedures will help organizations confidently navigate these new requirements and ensure the safe, ethical, and lawful use of AI technologies.

For comprehensive guidance or to discuss specific compliance needs, please contact Anna Rossi or Lucy Saim at DNV Cyber.

11/21/2025 2:54:00 AM